When Machines Start Thinking for Themselves

Picture this: you wake up tomorrow and your phone has already ordered your coffee, scheduled your meetings, and even rescheduled your dentist appointment because it noticed you had a conflict. Sounds like science fiction? Well, it’s not anymore. 2025’s agents will be fully autonomous AI programs that can scope out a project and complete it with all the tools they need and no help from human partners. These aren’t your typical chatbots anymore; they’re intelligent systems that can actually think, plan, and act on your behalf. The question isn’t whether they’re coming; it’s whether you’re ready to let them into your life. The hype invites you to imagine a system that thinks for you, makes your decisions, and takes actions on your computer. Realistically, that’s terrifying. But here’s the thing: we might not have a choice.

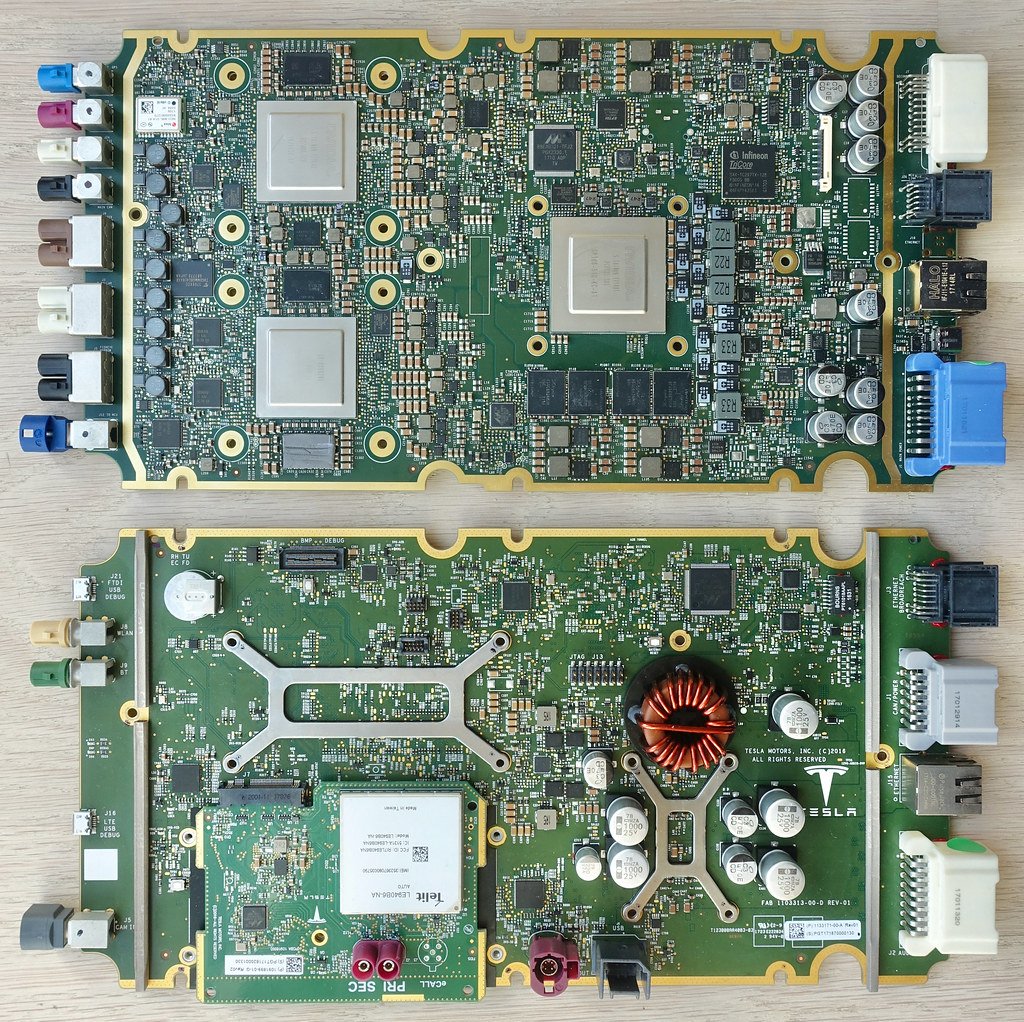

What Makes These Bots Different

AI agents are autonomous systems that perceive their environment, make decisions, and act to achieve specific goals, often while interacting with humans or other systems. Here’s what sets them apart from the AI tools you’re already using: they don’t just respond to your commands like a well-trained assistant. Instead, they observe their environment, analyze the situation, and take action without you having to spell out every single step. Think of it like the difference between giving someone detailed directions to the grocery store versus just telling them to “go get groceries” and trusting them to figure out the rest. Their work falls into four basic steps. First, assess the task: determine what needs to be done and gather relevant data to understand the context. Second, plan the task: break it into steps, gather the necessary information, and analyze the data to decide the best course of action. Third, execute the task, using knowledge and tools to complete it, such as providing information or initiating an action. Fourth, learn from the task to improve future performance.
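The four steps above can be sketched as a small loop. This is a minimal illustration rather than how any real agent framework works: the `ToyAgent` class, its " then "-separated task format, and the two stand-in tools are all hypothetical, chosen only to make each step visible.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToyAgent:
    """Hypothetical agent that walks one task through the four steps."""
    tools: dict[str, Callable[[str], str]]            # tool name -> function
    memory: list[dict] = field(default_factory=list)  # record of past runs

    def assess(self, task: str) -> dict:
        # Step 1: understand the task and pull in context from earlier runs.
        past_runs = [m for m in self.memory if m["task"] == task]
        return {"task": task, "past_runs": past_runs}

    def plan(self, context: dict) -> list[tuple[str, str]]:
        # Step 2: break the task into (tool, argument) steps.
        # Assumed format: steps separated by " then ", tool name first.
        steps = []
        for part in context["task"].split(" then "):
            tool_name, _, argument = part.strip().partition(" ")
            steps.append((tool_name, argument))
        return steps

    def execute(self, steps: list[tuple[str, str]]) -> list[str]:
        # Step 3: call the matching tool for each step.
        results = []
        for tool_name, argument in steps:
            tool = self.tools.get(tool_name)
            if tool is None:
                results.append(f"no tool for '{tool_name}'")
            else:
                results.append(tool(argument))
        return results

    def learn(self, task: str, results: list[str]) -> None:
        # Step 4: store the outcome so later assessments can use it.
        self.memory.append({"task": task, "results": results})

    def run(self, task: str) -> list[str]:
        context = self.assess(task)
        steps = self.plan(context)
        results = self.execute(steps)
        self.learn(task, results)
        return results


# Stand-in tools; a real agent would call APIs or other systems here.
agent = ToyAgent(tools={
    "lookup": lambda arg: f"found {arg}",
    "notify": lambda arg: f"sent {arg}",
})
results = agent.run("lookup order 42 then notify customer")
print(results)
```

The point of the sketch is the shape of the loop, not the logic inside each step. In a real agent the planning step would typically be handled by a language model, which is exactly where the unpredictability discussed later comes from.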

They’re Already Working Behind the Scenes

You might think AI agents are some futuristic concept, but they’re already embedded in your daily life more than you realize. In the contact center, for example, AI agents can connect with existing CRMs, analyze customer interactions in real time, identify patterns, and proactively suggest solutions or personalized offers. By leveraging conversational AI, these agents can anticipate customer needs and autonomously decide which processes to run to resolve them. Your streaming services use AI agents to curate your recommendations, your bank employs them to detect fraud, and that smart thermostat in your living room? It’s making decisions about your comfort without asking permission. These autonomous systems have already found their place in customer service, healthcare, finance, and even entertainment. The difference now is that they’re getting smarter, faster, and more independent than ever before.

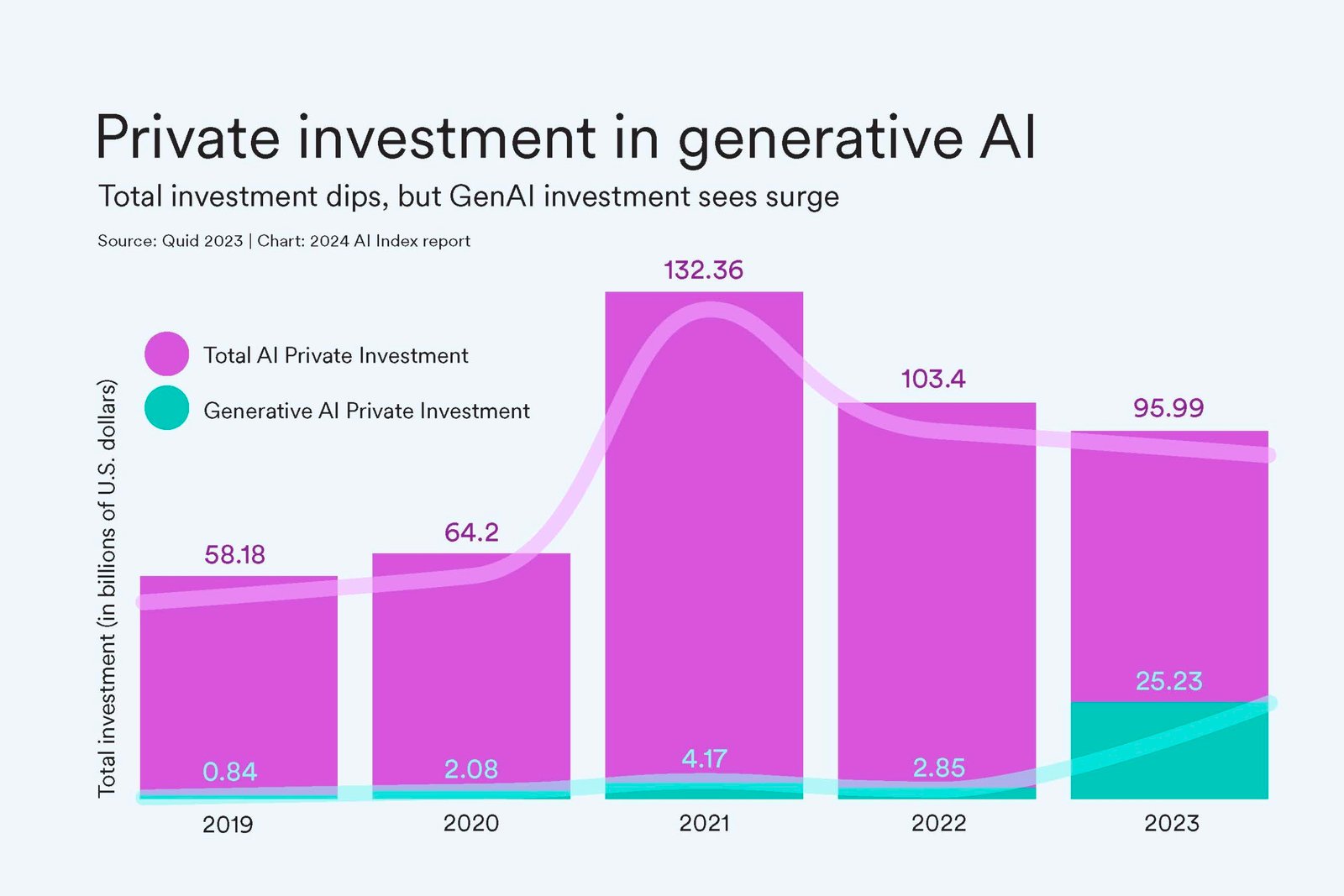

The Efficiency Promise That’s Hard to Ignore

Let’s be honest: AI agents are incredibly good at what they do. Models’ ability to reason keeps improving, allowing them to take actions autonomously and complete complex tasks across workflows. This is a profound step forward. As an example, in 2023, an AI bot could support call center representatives by synthesizing and summarizing large volumes of data (including voice messages, text, and technical specifications) to suggest responses to customer queries. In 2025, an AI agent can converse with a customer and plan the actions it will take afterward, such as processing a payment, checking for fraud, and completing a shipping action. Agents process information at superhuman speeds, work around the clock without getting tired, and don’t have bad days that affect their performance. According to Gartner, by 2028, 33% of enterprise software will include AI agent capabilities, enabling organizations to make 15% of daily decisions autonomously. This trend highlights the potential of AI agents to optimize decision-making and resource allocation. For businesses, this translates to significant cost savings and improved customer experiences. For individuals, it means getting things done faster and more accurately than we ever could on our own.

When Speed Becomes the Enemy

In 1980, computer systems falsely indicated that over 2,000 Soviet missiles were heading toward North America. This error triggered emergency procedures that brought us perilously close to catastrophe. What averted disaster was human cross-verification between different warning systems. Had decision-making been fully delegated to autonomous systems prioritizing speed over certainty, the outcome might have been catastrophic. This historical example illustrates a fundamental concern with AI agents: their speed can be both their greatest asset and their most dangerous liability. While they can process information and make decisions in milliseconds, they lack the human ability to pause, reflect, and consider the broader implications of their actions. Despite their potential, AI agents pose certain risks around technical limitations, ethical concerns and broader societal impacts associated with a system’s level of autonomy and the overall potential of its use when humans are removed from the loop. Technical risks include errors and malfunctions and security issues including the potential for automating cyberattacks.

The Black Box Problem

Here’s where things get really uncomfortable: most AI agents can’t explain their reasoning in a way humans can easily understand. This core issue lies at the heart of what’s most exciting about AI agents: the more autonomous an AI system is, the more human control we cede. AI agents are developed to be flexible, capable of completing a diverse array of tasks that don’t have to be directly programmed. For many systems, this flexibility is possible because they’re built on large language models, which are unpredictable and prone to significant (and sometimes comical) errors. It’s like having an incredibly smart colleague who gives you brilliant solutions but can never explain how they arrived at them. Key risks include dependency on data quality, lack of creativity, ethical and security concerns, and the potential for issues like infinite feedback loops or cascading failures in multi-agent systems. This “black box” nature becomes particularly problematic when these agents make decisions that significantly impact your life, career, or finances.

Who’s Responsible When Things Go Wrong?

The autonomous nature of AI agents raises ethical questions about decision-making and accountability, along with socioeconomic risks around job displacement, over-reliance, and disempowerment. If an AI agent makes a mistake that costs you money, damages your reputation, or worse, who takes the blame? There is also the problem of emergent unethical behavior: when agents interact, unpredictable behaviors can emerge, sometimes breaching ethical or legal norms. Multi-agent systems raise “novel ethical dilemmas around fairness and collective responsibility”, as ethicists have noted. If an AI team makes a wrong medical decision, who is accountable: the doctor, the AI developer, or each agent’s creator? The legal system is struggling to keep up with these questions, and in many cases, the answer is frustratingly unclear. This accountability gap means that when you delegate decisions to AI agents, you might still be left holding the bag when things go sideways.

The Privacy Trade-off You Might Not Realize You’re Making

AI agents need access to vast amounts of your personal data to function effectively, which raises critical questions about data privacy, ownership, and control. As these systems collect and process increasingly personal and sensitive information, there is a growing need for comprehensive data governance frameworks. They want to know your habits, preferences, financial information, communication patterns, and more. It’s like giving someone access to your diary, bank statements, and personal conversations all at once, and then trusting them to keep it safe. AI agents can clearly be extraordinarily helpful in what we do every day, but that help comes with equally clear privacy, safety, and security concerns. The more personalized and helpful you want your AI agent to be, the more of your privacy you’ll need to surrender.

When Hackers Target Your Digital Assistant

The proliferation of autonomous agents also introduces new security vulnerabilities. As these systems become more interconnected and influential, they become attractive targets for cyberattacks. Imagine if someone hacked your AI agent and used it to make purchases, send messages, or access your accounts. Hostile actors could also try to trick or hack AI agents into malfunctioning, and a coordinated hack on a multi-agent network (e.g., feeding false data to all agents) could have cascading effects. The Cooperative AI report flags “multi-agent security” as a key risk factor, where novel attack vectors exist in agent societies. Unlike traditional cyberattacks that might steal your data, attacks on AI agents could actively use your digital identity to cause ongoing damage. The interconnected nature of these systems means that a security breach in one agent could potentially compromise multiple aspects of your digital life simultaneously.

The Skill Erosion Nobody Talks About

As we increasingly delegate decision-making to AI systems, there is a risk of over-reliance and the gradual deskilling of human capabilities. This raises questions about maintaining meaningful human control, especially in high-stakes domains like healthcare and criminal justice. Think about how GPS navigation has affected your ability to read maps or remember directions. Now imagine that happening with critical thinking, problem-solving, and decision-making skills. But even when AI is deployed to augment (rather than replace) human labor, employees might face psychological consequences. If human workers perceive AI agents as being better at doing their jobs than they are, they could experience a decline in their self-worth. If you’re in a position where all of your expertise seems no longer useful—that it’s kind of subordinate to the AI agent—you might lose your dignity. When AI agents consistently make better decisions than we do, we risk becoming dependent on them not just for convenience, but for our basic cognitive functions.

The Multi-Agent Chaos Scenario

As multiple AI agents begin interacting with each other, emergent behaviors can arise that nobody anticipated or programmed. One concern is agent collusion: AI pricing agents in different companies could learn to collude (raising prices for consumers) without explicit instructions. In 2011, for instance, algorithmic pricing bots on Amazon, each reacting to the other, unknowingly drove a book’s price to absurd heights. Another concern is bias compounding: if one agent’s biased output feeds another, unfair decisions could result at scale. Multi-agent dependencies are a further risk: when agents rely heavily on each other, failures in one system can cascade, disrupting broader operations. Then there are infinite feedback loops: without proper safeguards, agents can perpetuate flawed decisions or outputs by repeatedly acting on their own feedback. It’s like a digital ecosystem where the inhabitants develop their own rules and behaviors, potentially in ways that don’t align with human interests or values.
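To make the feedback-loop risk concrete, here is a rough sketch of two pricing bots that each set their price as a multiple of the other’s, plus the kind of safeguard the paragraph above has in mind. Everything in it is an assumption for illustration: the function name `run_repricing`, the multipliers, the round limit, and the price ceiling are invented and do not describe any real seller’s algorithm.

```python
def run_repricing(price_a, price_b, factor_a, factor_b,
                  max_rounds=50, ceiling=1000.0):
    """Simulate two bots repricing against each other.

    Each round, bot A sets its price to factor_a times B's price, then
    bot B sets its price to factor_b times A's new price. Two safeguards
    stop the loop: a cap on rounds and a ceiling on either price.
    """
    for round_number in range(1, max_rounds + 1):
        price_a = round(price_b * factor_a, 2)
        price_b = round(price_a * factor_b, 2)
        if max(price_a, price_b) > ceiling:
            # Safeguard tripped: stop and hand the decision to a human.
            return {"halted": True, "round": round_number,
                    "price_a": price_a, "price_b": price_b}
    return {"halted": False, "round": max_rounds,
            "price_a": price_a, "price_b": price_b}


# Combined multiplier above 1: prices ratchet upward until the ceiling trips.
runaway = run_repricing(100.0, 100.0, factor_a=1.0, factor_b=1.25)

# Combined multiplier below 1: prices drift down and the ceiling never trips.
stable = run_repricing(100.0, 100.0, factor_a=0.99, factor_b=1.0)

print(runaway)
print(stable)
```

Neither bot is doing anything unreasonable on its own terms. The runaway comes from the interaction, which is why the safeguard sits around the loop rather than inside either bot.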

Drawing the Line: What Decisions Should Stay Human?

Amid all this AI development, human oversight will remain a central cog in the evolving AI-powered agent wheel. In 2025, a lot of conversation will be about drawing the boundaries around what agents are allowed and not allowed to do, and always having human oversight. The key question isn’t whether AI agents can make better decisions than humans—in many cases, they can. The question is which decisions are too important, too personal, or too consequential to delegate to a machine. Some will counter that the benefits are worth the risks, but we’d argue that realizing those benefits doesn’t require surrendering complete human control. Instead, the development of AI agents must occur alongside the development of guaranteed human oversight in a way that limits the scope of what AI agents can do. Medical diagnoses, financial investments, legal decisions, and relationship choices might be areas where human judgment should remain paramount, regardless of how sophisticated AI becomes.
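One way to picture “drawing the boundaries” is as an explicit policy that every proposed action must pass before the agent may act. The sketch below is a hypothetical illustration: the action names, the two sets, and the `review` function are invented here, and a real deployment would need a far richer policy than a lookup in two sets.

```python
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    NEEDS_HUMAN = "needs_human"
    DENY = "deny"


# Hypothetical policy: low-stakes actions the agent may take on its own,
# and high-stakes ones that always require a human decision.
AUTONOMOUS = {"order_coffee", "schedule_meeting"}
HUMAN_APPROVAL = {"medical_diagnosis", "financial_investment", "legal_decision"}


def review(action, human_approves=None):
    """Decide whether the agent may carry out `action`.

    `human_approves` is an optional callback standing in for a real
    approval step; it receives the action name and returns True or False.
    """
    if action in AUTONOMOUS:
        return Verdict.ALLOW
    if action in HUMAN_APPROVAL:
        if human_approves is None:
            return Verdict.NEEDS_HUMAN  # pause until a person weighs in
        return Verdict.ALLOW if human_approves(action) else Verdict.DENY
    # Anything not explicitly listed is refused by default.
    return Verdict.DENY


print(review("order_coffee"))
print(review("financial_investment"))
print(review("financial_investment", human_approves=lambda action: True))
print(review("delete_account"))
```

The default-deny branch is the design choice that matters most here: an agent limited to what it has been explicitly granted fails safe when it encounters something nobody anticipated.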

The Trust Calibration Challenge

Learning to trust AI agents appropriately, neither too much nor too little, is perhaps the greatest challenge we face. For Gajjar, the question is one of risk and governance: as AI agents evolve from content generators to autonomous problem-solvers, they must be rigorously stress-tested in sandbox environments to avoid cascading failures. Designing mechanisms for rollback actions and ensuring audit logs are integral to making these agents viable in high-stakes industries. You need to develop what researchers call “appropriate reliance”: knowing when to trust the agent’s judgment and when to step in yourself. Since AI agents are partly autonomous, they require a human-led management model. You’ll need to balance costs and ROI as you deploy them, develop metrics for human-AI teams, and conduct rigorous oversight to prevent agents from conducting unexpected, harmful, or noncompliant activity. This requires understanding not just what the AI can do, but also what it can’t do and where its blind spots lie.
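Rollback actions and audit logs are easier to reason about with a small example. The sketch below assumes a hypothetical `AuditedSession` class, invented for illustration, in which every action is registered together with a function that undoes it, and every step is written to a log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class AuditedSession:
    """Hypothetical wrapper that logs each agent action and can undo it."""
    audit_log: list[dict] = field(default_factory=list)
    undo_stack: list[tuple[str, Callable[[], None]]] = field(default_factory=list)

    def perform(self, name, do, undo):
        # Run the action, remember how to reverse it, and log it.
        do()
        self.undo_stack.append((name, undo))
        self._record(name, "done")

    def rollback(self):
        # Undo actions in reverse order, most recent first.
        while self.undo_stack:
            name, undo = self.undo_stack.pop()
            undo()
            self._record(name, "rolled_back")

    def _record(self, name, status):
        self.audit_log.append({
            "action": name,
            "status": status,
            "at": datetime.now(timezone.utc).isoformat(),
        })


# Stand-in for a real account; the agent charges it twice, then reverts.
balance = {"amount": 100}
session = AuditedSession()
session.perform(
    "charge 30",
    do=lambda: balance.update(amount=balance["amount"] - 30),
    undo=lambda: balance.update(amount=balance["amount"] + 30),
)
session.perform(
    "charge 20",
    do=lambda: balance.update(amount=balance["amount"] - 20),
    undo=lambda: balance.update(amount=balance["amount"] + 20),
)
balance_before_rollback = balance["amount"]
session.rollback()

print(balance_before_rollback, balance["amount"])
for entry in session.audit_log:
    print(entry["action"], entry["status"])
```

The catch is that this only works for actions that can be reversed. Sending an email or shipping a package has no clean undo, which is one more reason to keep a human in the loop for irreversible steps.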

Building Your AI Relationship Strategy

Looking forward to 2025, AI agents are poised to become far more sophisticated, autonomous, and versatile. They will go beyond chatbots, virtual assistants, and simple task automation to become invaluable collaborators, decision-makers, and ethical guides. In the coming years, we can expect AI agents to not only enhance efficiency and productivity but also empower humans to achieve greater levels of creativity, personalization, and well-being. Ultimately, the future of AI agents in 2025 is not one of replacement, but of symbiosis—a future where human intelligence and AI work hand in hand to tackle the challenges of tomorrow. The key is approaching this relationship thoughtfully, starting with low-stakes decisions and gradually building trust as you learn how these systems work. Workflows will fundamentally change, but humans will still be instrumental since game-changing value comes from a human-led, tech-powered approach. People instruct and oversee AI agents as they automate simpler tasks. People iterate with agents on more complex challenges, such as innovation and design. And people “orchestrate” teams of agents, assigning tasks and then improving and stitching together the results.

The Choice You Can’t Avoid

By 2025, AI agents will transform industries, changing how businesses operate and make decisions. These autonomous systems are designed to handle tasks with minimal human input, impacting customer service, enterprise management, and more. As they become more common, AI agents will drive efficiency, simplify processes, and open up new growth opportunities. The question isn’t really whether you’ll let AI agents make decisions for you—it’s which decisions you’ll consciously choose to delegate and which ones you’ll insist on keeping for yourself. Mitigating risks from agentic AI will require transparent design, strong safety measures, and governance to maximize benefits while minimizing harm. Ultimately, perhaps no agentic AI will be fully autonomous — humans may always need to be involved. Human oversight will be essential until AI systems become reliable, with involvement determined by task complexity and potential risks; companies will have to ensure that humans understand the agentic AI’s reasoning before making decisions, rather than blindly accepting its output. The future belongs to those who can navigate this partnership thoughtfully, maintaining human agency while leveraging AI capabilities. Ready or not, your digital coworker is clocking in—and they’re eager to get started. What decisions will you trust them with first?