The Digital Deputies: How AI Is Changing Policing Forever

Artificial intelligence is no longer a futuristic fantasy in law enforcement; it is already reshaping police departments across continents. From busy urban centers to quiet rural towns, AI-driven tools are fast becoming standard-issue gear. Agencies use machine learning to analyze crime patterns, process evidence, and deploy officers more efficiently. Amid these advances, a critical debate rages: can the same technology that promises to deliver justice also deepen prejudice? As agencies embrace AI, the need for careful scrutiny and public dialogue has never been more urgent. AI’s ability to sift through mountains of data in seconds is both awe-inspiring and intimidating, and it raises hard questions about accountability, transparency, and the rights of ordinary citizens. The future of justice may be shaped not only by human hands but also by code and algorithms. So whose hands are really on the scales?

The Rise of Predictive Policing: Crystal Ball or Pandora’s Box?

Predictive policing is a striking example of both AI’s potential and its peril. By drawing on years of crime reports, arrests, and public complaints, predictive algorithms promise to “see” where crime is likely to strike next. According to a 2022 National Institute of Justice report, cities that adopted these systems reported a 20% dip in property crimes, a figure that sounds almost magical. Yet beneath this optimism lurks a troubling truth: if AI is trained on biased historical data, it can reinforce the very injustices it aims to fix. Recorded crime reflects where officers are sent as much as where offenses actually occur, so a neighborhood flagged as a hot spot receives more patrols, generates more reports, and is flagged again. Critics argue that historically over-policed neighborhoods may be unfairly targeted once more, creating a cycle of heightened surveillance and mistrust. While some departments celebrate AI’s ability to allocate resources more efficiently, others warn that predictive policing could become a self-fulfilling prophecy that keeps certain communities permanently in the crosshairs. Is AI really predicting crime, or just repeating the past?
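The feedback loop described above can be made concrete with a toy simulation. This is a minimal sketch under deliberately simple, hypothetical assumptions, not a model of any real product: two districts share the same true incident rate, historical records start slightly skewed toward district "A", and incidents are recorded only where patrols are sent. The function name `simulate` and both allocation rules (a greedy "hot spot" rule and a proportional rule) are illustrative inventions.

```python
from typing import Dict

def simulate(days: int, true_rate: float, records: Dict[str, float],
             greedy: bool = True) -> Dict[str, float]:
    """Toy feedback loop (hypothetical): allocate patrols from past records,
    then record only the incidents that patrols observe. Every district has
    the same true incident rate, so any divergence comes from allocation."""
    records = dict(records)  # copy so the caller's dict is not mutated
    for _ in range(days):
        if greedy:
            # Hot-spot rule: the district with the most records gets all patrols.
            hot = max(records, key=records.get)
            shares = {d: 1.0 if d == hot else 0.0 for d in records}
        else:
            # Proportional rule: patrol share mirrors each district's share of past records.
            total = sum(records.values())
            shares = {d: n / total for d, n in records.items()}
        for d in records:
            # Same true rate everywhere, but only patrolled incidents enter the data.
            records[d] += true_rate * shares[d]
    return records


history = {"A": 12.0, "B": 10.0}  # hypothetical, slightly skewed starting data
print(simulate(30, 3.0, history))                # {'A': 102.0, 'B': 10.0}
print(simulate(30, 3.0, history, greedy=False))  # A:B ratio remains 1.2
```

Under the greedy rule, district A absorbs every new record even though both districts have identical underlying crime. Under the proportional rule the initial 12:10 skew is never corrected, because the data only ever confirms where patrols were sent. Real systems are far more complex, but the sketch shows why biased inputs need not wash out on their own.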

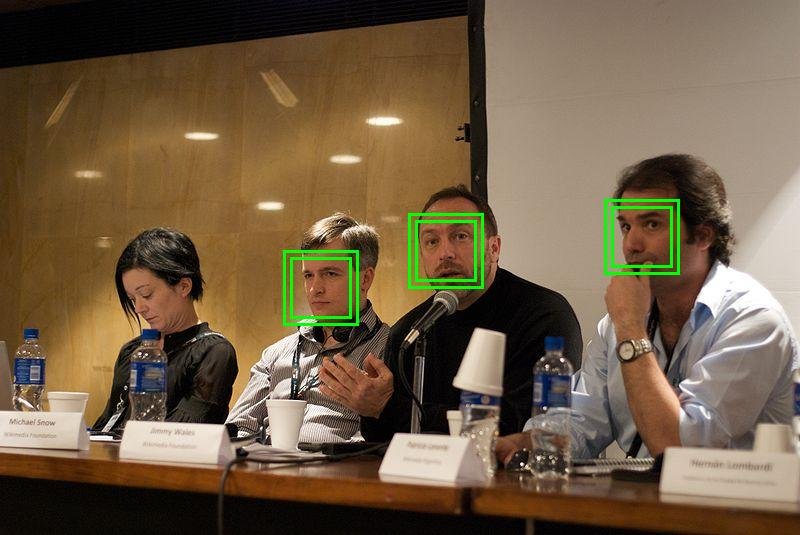

Facial Recognition Technology: A Double-Edged Sword

Facial recognition technology (FRT) has become a staple of modern policing, deployed in airports and stadiums and on city streets. Its promise is clear: rapid identification of suspects and missing persons, and even of potential threats before they escalate. But with great power comes great controversy. A 2023 MIT Media Lab study revealed that FRT misidentifies people of color up to 34% more often than their white counterparts. This is not just a technical glitch; it can mean wrongful arrests, shattered reputations, and deep scars in already vulnerable communities. As high-profile mistakes make headlines, public trust in both the technology and the institutions using it takes a hit. Law enforcement agencies find themselves at a crossroads: do they double down on FRT, or pause to address its glaring flaws? The stakes could not be higher, because every false positive can turn a real person’s life upside down.

AI and Surveillance: Security’s Watchful Eye or Big Brother’s Gaze?

AI-powered surveillance is increasingly widespread, quietly watching over city parks, highways, and public squares. Smart cameras and drones are being deployed with promises to keep citizens safe, catch criminals in the act, and deter would-be offenders. But as the lens widens, so does the unease. A 2023 survey found that 62% of Americans are worried about government surveillance using AI, a sign of growing fear of living under constant watch. Privacy advocates warn that such surveillance does more than catch bad actors: it can chill free speech, suppress protests, and erode the very freedoms it is meant to protect. Balancing enhanced security against the right to privacy is a dilemma that law enforcement and lawmakers are still struggling to solve. The question remains: how much are we willing to trade for a sense of safety?

Bias in AI Algorithms: When Justice Isn’t Blind

One of AI’s greatest challenges is hidden bias. Every algorithm learns from data, and if that data carries traces of systemic prejudice, the outcomes can be devastatingly unfair. A 2021 study from the AI Now Institute showed how some law enforcement AI tools, trained on skewed data, can lead to discriminatory policing practices. This kind of bias does not always announce itself; it can seep quietly into decisions about who gets stopped, searched, or surveilled. Calls for transparency and regular audits are growing louder, with activists and technologists urging agencies to open the “black box” of AI. A basic audit asks, for example, whether a tool wrongly flags members of one group more often than members of another. The demand is simple: algorithms must face the same scrutiny as any other tool of justice. Only then can the promise of fair and equal treatment move from slogan to reality.
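A basic version of such an audit compares false positive rates across groups. The sketch below assumes a hypothetical log format in which each record is a `(group, flagged, true_match)` tuple; real systems will store this differently, and a serious audit would also examine sample sizes, confidence intervals, and how ground truth was established. The function names, group labels, and counts are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical log schema: (group label, tool flagged a match?, was it truly a match?)
Record = Tuple[str, bool, bool]

def false_positive_rates(records: Iterable[Record]) -> Dict[str, float]:
    """Share of true non-matches that the tool wrongly flagged, per group."""
    wrongly_flagged: Dict[str, int] = defaultdict(int)
    non_matches: Dict[str, int] = defaultdict(int)
    for group, flagged, true_match in records:
        if not true_match:  # only true non-matches can become false positives
            non_matches[group] += 1
            if flagged:
                wrongly_flagged[group] += 1
    return {g: wrongly_flagged[g] / n for g, n in non_matches.items()}

def disparity_vs(rates: Dict[str, float], reference: str) -> Dict[str, float]:
    """Each group's false positive rate divided by the reference group's rate."""
    base = rates[reference]
    if base == 0:
        raise ValueError("reference group has no false positives; ratio undefined")
    return {g: r / base for g, r in rates.items()}


# Invented counts: 128 true non-matches per group, plus a few true matches.
log = ([("X", True, False)] * 12 + [("X", False, False)] * 116
       + [("Y", True, False)] * 8 + [("Y", False, False)] * 120
       + [("X", True, True)] * 5)
rates = false_positive_rates(log)
print(rates)                     # {'X': 0.09375, 'Y': 0.0625}
print(disparity_vs(rates, "Y"))  # {'X': 1.5, 'Y': 1.0}
```

Here group X is wrongly flagged 1.5 times as often as group Y, which could be reported as "50% more often" even though the absolute gap is about 3.1 percentage points (9.375% versus 6.25%). Because relative and absolute figures can tell very different stories, an audit report should say which one it uses.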

The Role of AI in Criminal Investigations: Solving Mysteries at Warp Speed

AI is transforming the way detectives crack cases. With machine learning, evidence can be processed at breathtaking speed: DNA samples matched, fingerprints analyzed, and digital trails reconstructed, often in hours instead of days. Some agencies report that AI-driven forensic tools have cut analysis times by up to 50%, allowing investigators to pursue leads that might otherwise have gone cold. Yet this efficiency carries its own risk. Overreliance on algorithmic outputs may sideline the experience and intuition of seasoned detectives, replacing critical thinking with blind faith in code. The challenge is to strike a balance: harnessing AI’s power without surrendering human judgment. As investigations become more data-driven, the need for oversight and healthy skepticism only grows.

Ethical Considerations in AI Deployment: Drawing the Moral Line

Deploying AI in law enforcement is not just a technical task; it is an ethical minefield. The questions come thick and fast: Who is accountable when an algorithm makes a mistake? How can agencies ensure transparency when decisions are guided by opaque machine learning models? A 2023 report by the Ethical AI Initiative stresses the urgent need for guidelines and oversight. Without clear standards, the risk of misuse or abuse looms large. Law enforcement agencies face mounting pressure to develop robust ethical frameworks that prioritize civil rights and the public interest. As AI becomes more embedded in policing, these ethical questions grow ever more pressing, demanding vigilance from developers and decision-makers alike.

Community Engagement and AI Transparency: Building Trust or Fueling Suspicion?

No technology exists in a vacuum, least of all in the emotionally charged environment of law enforcement. Community engagement is emerging as a linchpin of successful AI adoption. A 2022 survey found that 75% of respondents believe community input should be required before AI policing tools are deployed. Transparent communication about how, when, and why AI is used can build bridges between departments and the neighborhoods they serve. Conversely, secrecy breeds suspicion, as fears of surveillance and discrimination fuel public backlash. Some law enforcement agencies are starting to host forums, workshops, and listening sessions to demystify AI for the public. The goal is clear: technology must serve the community, not alienate it.

Future Trends in AI and Law Enforcement: What’s Next on the Horizon?

The frontier of AI in policing is constantly shifting, with new technologies emerging at a dizzying pace. One notable trend is the integration of AI with blockchain to create tamper-evident evidence chains and secure data management systems. Machine learning is also being tuned to support critical decisions, from resource allocation to threat assessment. As wearable tech and real-time analytics grow more sophisticated, the line between science fiction and reality continues to blur. Yet every innovation brings new ethical and practical questions. Agencies must weigh the benefits of efficiency and accuracy against the risks of overreach and unintended consequences. The road ahead promises both dazzling breakthroughs and daunting challenges.
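The core idea behind a tamper-evident evidence chain can be sketched without any blockchain platform: each custody record stores a hash of the previous record, so altering any earlier entry breaks the links that follow. This minimal sketch uses only Python's standard `hashlib` and `json` modules; the record fields, officer names, and function names are hypothetical. A hash chain on its own makes tampering detectable, not impossible: whoever controls the entire log could rebuild it from scratch, which is why ledger systems replicate or publish the latest hash where others can check it.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def entry_hash(prev_hash: str, payload: dict) -> str:
    # Canonical JSON (sorted keys) so identical payloads always hash identically.
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def append(chain: List[Dict], payload: dict) -> None:
    # Link the new entry to the hash of the last one (or GENESIS if empty).
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"prev": prev, "payload": payload, "hash": entry_hash(prev, payload)})

def verify(chain: List[Dict]) -> bool:
    prev = GENESIS
    for entry in chain:
        # Each entry must point at the previous hash and match its own contents.
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["payload"]):
            return False
        prev = entry["hash"]
    return True


custody: List[Dict] = []
append(custody, {"item": "EV-001", "action": "collected", "by": "Officer A"})
append(custody, {"item": "EV-001", "action": "transferred to lab", "by": "Tech B"})
print(verify(custody))  # True
custody[0]["payload"]["by"] = "Officer C"  # simulate after-the-fact tampering
print(verify(custody))  # False
```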

Global Perspectives: How Different Countries Approach AI in Policing

AI’s role in law enforcement varies dramatically around the globe, shaped by local laws, culture, and public attitudes. In some countries, such as the United Kingdom and China, AI-powered surveillance and facial recognition have been rolled out on a large scale, sparking heated debates about privacy. Meanwhile, nations such as Germany and Canada are treading more cautiously, enacting strict regulations and holding public consultations before deploying new technologies. These differences highlight the importance of context: what works in one place may be unacceptable in another. As global conversations about digital rights and civil liberties evolve, countries look to each other for both inspiration and cautionary tales.

Training the Machines: The Human Side of AI in Law Enforcement

Behind every AI system is a team of engineers, analysts, and law enforcement professionals tasked with training and maintaining the technology. The quality of their work can determine whether AI serves justice or perpetuates bias. Continuous training, regular audits, and cross-disciplinary collaboration are essential to keeping algorithms in check. Some agencies are investing in partnerships with universities and advocacy groups to improve the accuracy and fairness of their tools. The human element remains crucial—AI is only as good as the people who build, test, and refine it. As the technology evolves, so too must the skills and sensibilities of those who oversee it.

Voices from the Field: What Officers and Citizens Are Saying

The introduction of AI into policing has sparked passionate responses from officers and citizens alike. Some officers praise AI for streamlining paperwork and helping them respond faster to emergencies. Others express concern about becoming too dependent on technology or losing the personal touch with the community. Citizens, meanwhile, are divided—some feel safer knowing crimes can be solved more quickly, while others fear increased surveillance and the risk of wrongful targeting. These real-world perspectives underscore the complexity of the issue. The future of AI in law enforcement will hinge not just on technical solutions, but on listening to the lived experiences of those on the front lines.

In Search of Fairness: Can AI Deliver Real Justice?

The debate over AI in law enforcement is far from settled. While technology has the potential to revolutionize policing for the better, it also brings the risk of amplifying old prejudices in new, digital forms. The ultimate question is not just whether AI can make policing smarter or faster, but whether it can make it fairer. The answer will depend on our willingness to confront uncomfortable truths, demand transparency, and insist that civil rights keep pace with technological progress. The pursuit of justice in the age of AI is a journey—one that demands vigilance, empathy, and the courage to question even our smartest machines.